
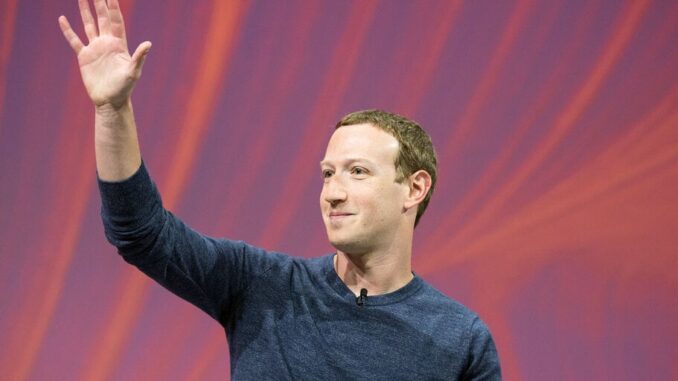

Meta’s ambition to lead the virtual and augmented reality future has not ebbed, and CEO Mark Zuckerberg sees cutting-edge artificial intelligence as a compelling way to get there.

Across several media interviews this past week, Zuckerberg made it clear that he sees recent developments in generative AI as key to realizing myriad applications in the “metaverse.” In particular, the Meta CEO zeroed in on digital avatars that will one day be embedded across the company’s products.

“I think that’s going to be very compelling and interesting, and obviously, we’re kind of starting slowly on that,” Zuckerberg said in an interview with The Verge on Wednesday.

This emphasis on avatars, or digitally generated visual personas, was showcased a day later when Zuckerberg appeared as a “codec avatar” for an interview on AI researcher Lex Fridman’s podcast. As Fridman marveled at the high-resolution, near-photorealistic depictions of himself and Zuckerberg, the tech CEO explained that AI is essential to understanding the content and context of the metaverse, and central to future improvements to Facebook, Instagram, and Meta’s other existing products.

Zuckerberg said his commitment to the metaverse remains firm despite continuing challenges surrounding the product and its core value proposition. Indeed, the progress AI has made in recent months appears to have drawn Meta’s attention back to virtual worlds. One product the company is working on is a pair of “smart glasses” that include Meta AI, an AI companion that can communicate using voice, text, and even physical gestures.

In his interview with The Verge, Zuckerberg acknowledged that much of the AI work in Meta’s products remains “pretty basic,” but laid out how he envisions AI becoming more adaptive to users over time.

“You get to this point where there’s going to be 100 million AIs just helping businesses sell things, then you get the creator version of that, where every creator is going to want an AI assistant—something that can help them build their community,” he said. “Then I think that there’s a bunch of stuff that’s just interesting kind of consumer use cases.”

Examples include AI chatbots that can help you cook, plan workouts, or build a vacation itinerary.

“I think that a bunch of these things can help you in your interactions with people,” he explained. “And I think that’s more our natural space.”

Zuckerberg suggested that there will be interactive AI profiles that operate more independently.

“Chat will be where most of the interaction happens, but these AIs are going to have profiles on Instagram and Facebook, and they’ll be able to post content, and they’ll be able to interact with people and interact with each other,” said the Meta CEO.

Zuckerberg also touched on the growing calls for government regulation of AI, saying he sees both sides of the issue, though he leans toward a view centered on U.S. competitiveness.

“I think that there is valuable stuff for the government to do, I think both in terms of protecting American citizens from harm and preserving I think what is a natural competitive advantage for the United States compared to other countries,” he said.

“I think this is just gonna be a huge sector, and it’s going to be important for everything, not just in terms of the economy, but there’s probably defense components and things like that,” he continued. “I think the US having a lead on that is important.”

But Meta’s AI ambitions have not been without critics.

Over the summer, researchers criticized Meta for describing its Llama 2 AI system as open source without acknowledging the licensing restrictions that limit who can use it. And in a June letter to Zuckerberg, a bipartisan pair of senators, Richard Blumenthal (D-CT) and Josh Hawley (R-MO), raised concerns about Llama’s potential misuse by bad actors.

Zuckerberg acknowledged that “not everything we do is open source” at Meta, but said that much of its work is, and that the company leans “probably a little more open-source” than its competitors. He conceded, however, that open-sourcing all of its code carries the risk that it could be abused.

On AI safety, the CEO argued that being as open as possible invites more scrutiny, which could lead to new industry standards that would be a “big advantage” on the security side.
