Microsoft Research has announced the release of Phi-2, a small language model (SLM) with remarkable capabilities for its size. Launched today, the model was first revealed at Microsoft's Ignite 2023 event, where Satya Nadella highlighted its ability to achieve state-of-the-art performance with a fraction of the training data.

Unlike GPT, Gemini and other large language models (LLMs), an SLM is trained on a limited dataset and uses fewer parameters, so it also requires less computation to run. As a result, an SLM cannot generalize as broadly as a large language model, but it can be very good and efficient at specific tasks, such as math and calculations in the case of Phi.

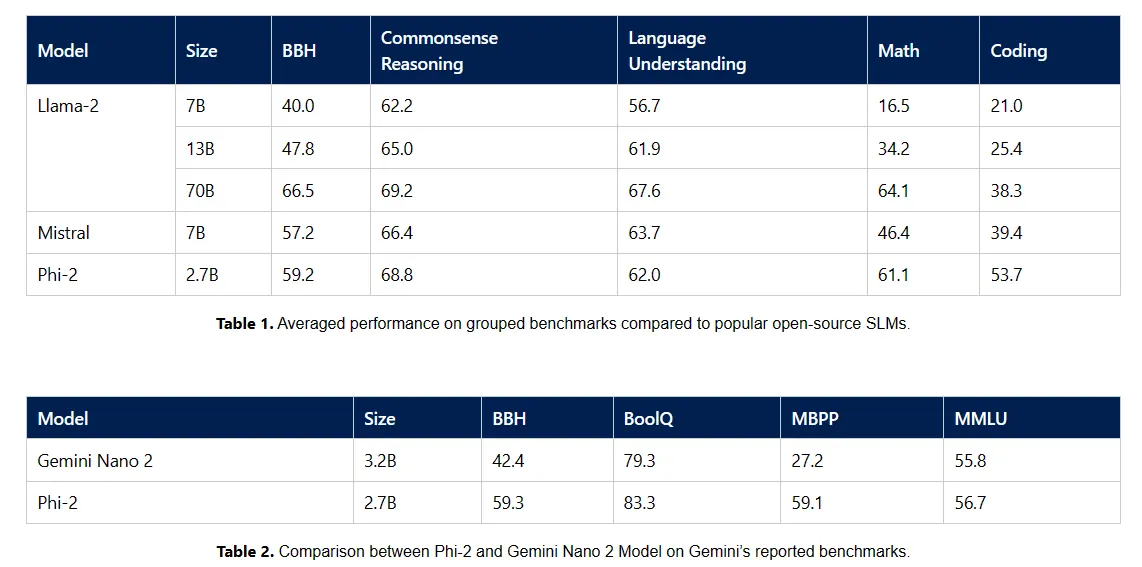

With its 2.7 billion parameters, Phi-2 demonstrates strong reasoning and language understanding, rivaling models up to 25 times its size, according to Microsoft. Microsoft Research attributes this to its focus on high-quality training data and advanced scaling techniques, which yielded a model that outperforms its predecessors on various benchmarks, including math, coding, and common-sense reasoning.

“With only 2.7 billion parameters, Phi-2 surpasses the performance of Mistral and Llama-2 models at 7B and 13B parameters on various aggregated benchmarks,” Microsoft said, throwing in a low blow for Google’s newest AI model: “Furthermore, Phi-2 matches or outperforms the recently-announced Google Gemini Nano 2, despite being smaller in size.”

Gemini Nano 2 is Google’s latest bet on a multimodal LLM capable of running locally. It was announced as part of the Gemini family of LLMs that are expected to replace PaLM-2 in most of Google’s services.

Microsoft's approach to AI goes beyond model development, however. The introduction of its custom chips, Maia and Cobalt, as reported by Decrypt, shows that the company is moving toward fully integrating AI and cloud computing. The chips, optimized for AI tasks, support Microsoft's larger vision of harmonizing hardware and software capabilities and compete directly with Google's Tensor and Apple's new M-series chips.

Notably, Phi-2 is small enough to run locally on low-end hardware, potentially even on smartphones, which opens the door to new applications and use cases.

As Phi-2 enters the realm of AI research and development, its availability in the Azure AI Studio model catalog is also a step toward democratizing AI research. Microsoft is one of the most active companies contributing to open-source AI development.

As the AI landscape continues to evolve, Microsoft's Phi-2 is evidence that the world of AI is not always about thinking bigger. Sometimes, the greatest power lies in being smaller and smarter.

Edited by Ryan Ozawa.