
ChatGPT-4 fell short on multiple fronts when asked to identify smart contract vulnerabilities. The result shows that while artificial intelligence (AI) can assist in finding security weaknesses, it cannot replace human auditors. At least not yet.

Smart contracts are the bedrock of DeFi, so it is paramount that they contain no vulnerabilities. A recent experiment by OpenZeppelin suggests that smart contract auditors can rest easy for now: AI is not about to take their jobs.

AI Cannot Yet Fix Smart Contracts

Ethernaut is a hacking game featuring smart contract levels. It enables users to learn about Ethereum while testing their hacking skills against historical exploits. Essentially, smart contracts are self-executing agreements with predefined conditions written in code.

Of the 23 Ethernaut challenges introduced before GPT-4’s training data cutoff in September 2021, the model solved 19. However, it struggled with Ethernaut’s newest levels, failing 4 of the 5 challenges added after that date.

The results demonstrate that while AI can help identify some security vulnerabilities, it cannot replace human auditors entirely.

“While ChatGPT was able to perform well with specific guidance, the ability to supply such guidance depends on the understanding of a security researcher. This underscores the potential for AI tools to increase audit efficiency in cases where the auditor knows specifically what to look for and how to prompt Large Language Models like ChatGPT effectively,” reads OpenZeppelin’s report.

It is important to note that ChatGPT was not trained specifically to detect vulnerabilities. A machine learning model trained solely on high-quality vulnerability-detection datasets would likely yield better results.

The experiment involved supplying the code for each Ethernaut level and prompting GPT-4 with the question: “Does the following smart contract contain a vulnerability?” GPT-4 provided solutions for several levels, such as Level 2, “Fallout,” where it highlighted a vulnerability related to the constructor function and provided a fix.
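As a rough illustration of that setup, the Python sketch below builds the quoted prompt around a contract's source and runs a naive check for the kind of constructor mistake found in "Fallout." The Solidity snippet is a simplified stand-in rather than the full Ethernaut source, and the helper names `build_prompt` and `near_miss_constructors` are hypothetical; this is not OpenZeppelin's tooling.

```python
import re

# Question quoted in the article; everything else here is an illustrative assumption.
PROMPT = "Does the following smart contract contain a vulnerability?"

# Simplified stand-in for the "Fallout" level, not the full Ethernaut source.
FALLOUT_LIKE_SOURCE = """
pragma solidity ^0.4.24;

contract Fallout {
    address public owner;

    // Meant to be the constructor, but the name is misspelled ("1" for "l"),
    // so anyone can call it and take ownership.
    function Fal1out() public payable {
        owner = msg.sender;
    }
}
"""


def build_prompt(source: str) -> str:
    """Combine the fixed question with the contract source (hypothetical helper)."""
    return f"{PROMPT}\n\n{source.strip()}"


def near_miss_constructors(source: str) -> list[str]:
    """Return function names that differ from the contract name by one character.

    In old Solidity versions a constructor was a function named after the
    contract, so a one-character typo silently produces a public function.
    """
    contract = re.search(r"\bcontract\s+(\w+)", source)
    if contract is None:
        return []
    name = contract.group(1)
    functions = re.findall(r"\bfunction\s+(\w+)\s*\(", source)
    return [
        fn
        for fn in functions
        if fn != name
        and len(fn) == len(name)
        and sum(a != b for a, b in zip(fn, name)) == 1
    ]


if __name__ == "__main__":
    print(build_prompt(FALLOUT_LIKE_SOURCE))
    print(near_miss_constructors(FALLOUT_LIKE_SOURCE))
```

A pattern check like this only catches one known class of bug, which is the same limitation the experiment points to: the tool is useful when someone already knows what to look for.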

GPT-4’s success with older levels may be attributed to its training data, which potentially included solution write-ups for those levels. Its inability to solve most of the newer levels supports this reading: where no published solutions were available before the cutoff, its performance dropped sharply.

The experiment also noted the impact of GPT-4’s default setting for “temperature,” which affects the randomness of its responses.
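To show what temperature does in general terms, here is a minimal Python sketch of temperature-scaled sampling over a made-up set of scores. It illustrates the standard technique only; the scores and the function names `softmax_with_temperature` and `sample_index` are assumptions for this example and say nothing about GPT-4's internals or its actual default value.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_index(logits, temperature, rng):
    """Draw one option index according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate answers
    rng = random.Random(0)
    for t in (0.2, 1.0, 2.0):
        probs = softmax_with_temperature(scores, t)
        picks = [sample_index(scores, t, rng) for _ in range(10)]
        print(t, [round(p, 3) for p in probs], picks)
```

At a low temperature nearly all of the probability sits on the top-scoring option, so repeated runs agree. At a higher temperature the probabilities flatten, so the same prompt can produce different answers from one run to the next.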

Hackers Target Weaknesses in Code

The centrality of smart contracts to the blockchain ecosystem can often cause problems. The code in smart contracts that govern DeFi protocols is publicly viewable by default.

On the one hand, this allows users to know exactly what is happening with their funds. On the other hand, it leaves open the possibility that black-hat hackers will spot an opening and exploit it.

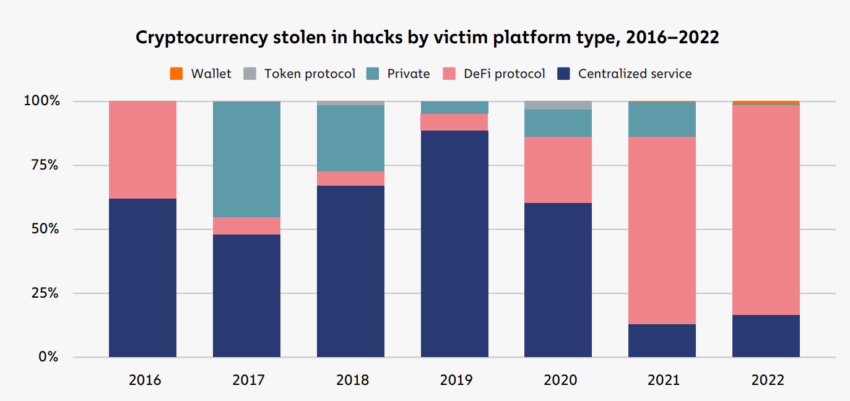

According to Chainalysis, in 2021, DeFi protocols accounted for 73.3% of cryptocurrency stolen by hackers. But in 2022, this number increased to 82.1%, totaling $3.1 billion.

Roughly 64% of that amount came from cross-chain bridge protocols, which use smart contracts to funnel funds across chains. A single weakness could be enough to lose billions in user funds.

Disclaimer

In adherence to the Trust Project guidelines, BeInCrypto is committed to unbiased, transparent reporting. This news article aims to provide accurate, timely information. However, readers are advised to verify facts independently and consult with a professional before making any decisions based on this content.
